Verifiable Computing-Power Collaboration Layer

The Verifiable Computing-Power Collaboration Layer is built on top of the communication-encryption layer and the identity-authentication layer, ensuring that every unit of computing power is fully tra

The Verifiable Computing-Power Collaboration Layer in DF Protocol serves as the technical core that connects encrypted communication with economic incentives. This layer receives computing-power contribution data from all participating nodes and verifies the authenticity, timeliness, and ownership of each contribution through a cryptography-driven and smart-contract-based mechanism. In doing so, it enables “computing power as a verifiable asset” with trustworthy attribution.

This layer is composed of the following key modules:

Secure Computing-Power Verification Module (PoSW: Proof of Secure Work)

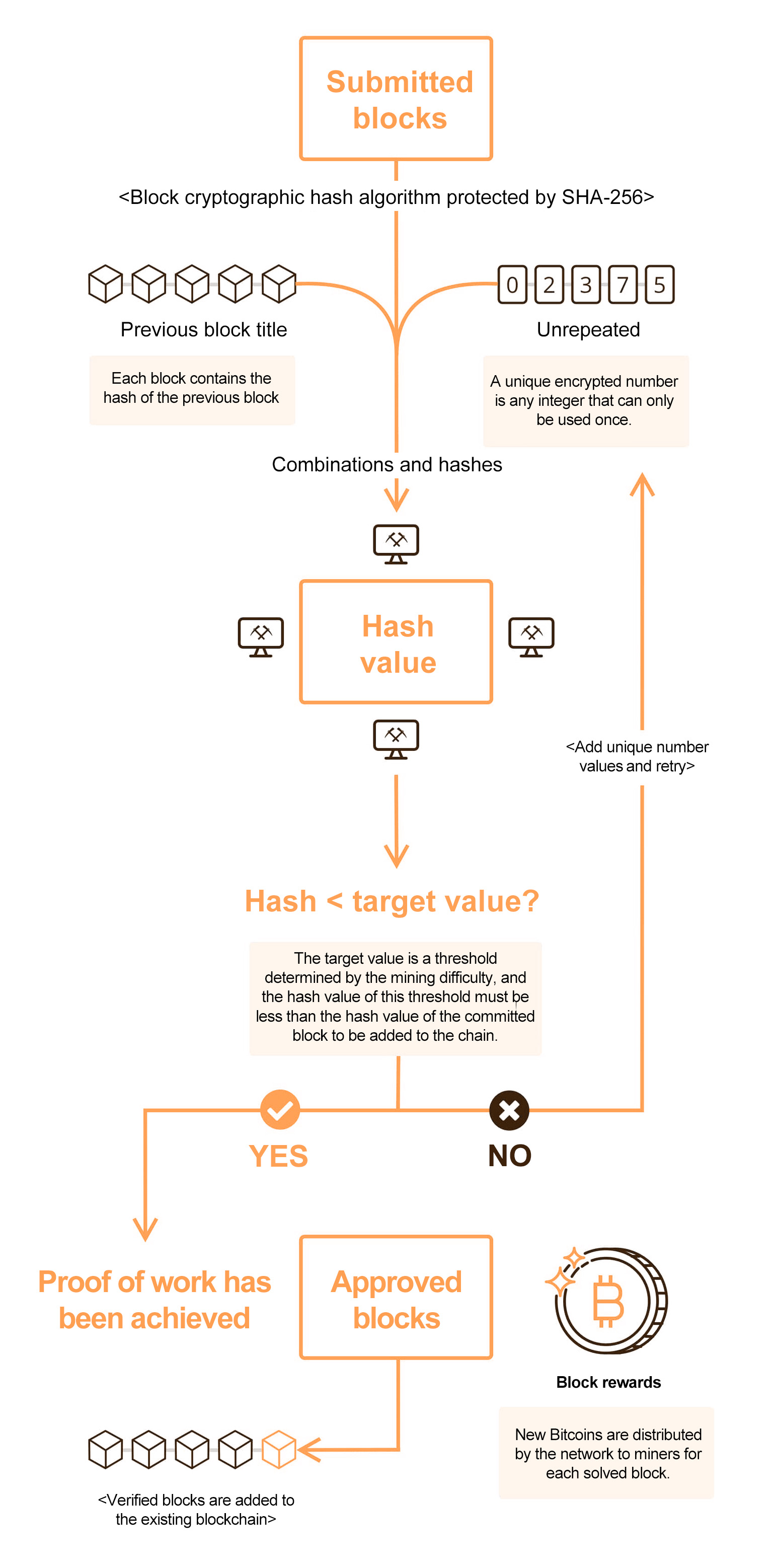

PoSW is one of the core consensus mechanisms of DF Protocol. Its purpose is to determine whether each reported computing-power contribution corresponds to real computation performed by the node.

Compared with traditional mechanisms such as PoW (Proof of Work) or PoS (Proof of Stake), PoSW focuses on the authenticity of computational behavior, rather than computation difficulty or token collateral. By integrating signatures, randomized verification, and on-chain attestation, PoSW ensures that computing-power outputs are verifiable, secure, and tamper-resistant.

1) Design Objectives

Ensure that each computing-power submission is the result of the node’s own independent computation—not forged, copied, or batch-tampered.

Prevent nodes without real computing contributions from receiving rewards, so that “fake miners” and “inactive nodes” cannot drain incentives.

Provide a standardized verification pipeline that is extensible and compatible with multi-task structures.

2) Core Verification Workflow

Step 1: Identity Validity Check

The system extracts node_id (DH-ID) from the data packet and queries the on-chain registry to confirm that the node has an active identity.

Step 2: Signature Verification

It reads the signature field and uses the public key registered on-chain to verify the signature over the task digest and timestamp, ensuring that the data is untampered and truly belongs to that node.

Step 3: Timeliness Verification

The protocol checks whether the difference between the reported timestamp and the current block time falls within a predefined T-second validity window, so that replayed or stale data is rejected rather than counted again.
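
A minimal sketch of the window check, assuming T is 30 seconds and that the current block time is available as a Unix timestamp. The names `VALIDITY_WINDOW_S` and `is_timely`, the value of T, and the symmetric treatment of past and future timestamps are assumptions made for illustration, not part of the protocol specification.

```python
VALIDITY_WINDOW_S = 30  # assumed value of T; the protocol text does not fix it


def is_timely(reported_ts: int, block_ts: int,
              window_s: int = VALIDITY_WINDOW_S) -> bool:
    """Return True if the reported timestamp lies within the validity window."""
    # Reject timestamps that are too old (stale or replayed) as well as
    # timestamps too far in the future (clock skew or forgery).
    return abs(block_ts - reported_ts) <= window_s
```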

Step 4: Task Result Consistency Audit

If the task belongs to a structured computation type (e.g., hashing, inference, model validation), the system recomputes the task digest (or uses ZK-based fast consistency proofs) and compares it with the submitted value to ensure result authenticity.

Step 5: Random Sampling Challenge

The system introduces a sampling mechanism powered by a VRF (Verifiable Random Function) or an on-chain entropy source to selectively re-validate a subset of node submissions.

If sampling verification succeeds → the submission is marked as valid computing power

If sampling fails → all submissions for that round are invalidated and a reputation penalty is applied
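
The sampling rule can be sketched as follows. This is an illustration under stated assumptions: `seed` stands in for a VRF output or on-chain entropy, `rate_bps` is a hypothetical sampling rate in basis points, and `recheck` is a caller-supplied function that re-validates one submission. None of these names come from the protocol itself.

```python
import hashlib
from typing import Callable, List


def is_sampled(seed: bytes, submission_id: bytes, rate_bps: int) -> bool:
    """Decide deterministically whether a submission is selected for re-validation."""
    # Hash the round seed together with the submission id, then compare the
    # result against the sampling rate (10_000 basis points = 100%).
    digest = hashlib.sha256(seed + submission_id).digest()
    return int.from_bytes(digest[:8], "big") % 10_000 < rate_bps


def audit_round(seed: bytes, submission_ids: List[bytes], rate_bps: int,
                recheck: Callable[[bytes], bool]) -> bool:
    """Return True if every sampled submission passes re-validation.

    A False result means all submissions for the round are invalidated;
    applying the reputation penalty is left to the caller.
    """
    sampled = [s for s in submission_ids if is_sampled(seed, s, rate_bps)]
    return all(recheck(s) for s in sampled)
```

Because the selection depends only on the seed and the submission id, any verifier holding the same seed reaches the same sample, while a node that cannot predict the seed cannot tell in advance which submissions will be checked.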

3) Data Structure Verification Model

Each computing-power submission will eventually produce the following structure (mapped on-chain):

This structure is written to the blockchain through the event ComputeProofSubmitted, forming the trustworthy computing-power ledger.

4) Multi-Dimensional Defense Capabilities

| Threat | Defense |
| --- | --- |
| Identity Forgery | Rely on on-chain registered DH-ID + signature verification; identity cannot be forged |
| Signature Attack | All data packets must have signatures matching the registered public key to ensure consistency |
| Data Forgery | Any task digest must be recomputed and checked within the time window to reject fake data |
| Sampling Evasion | Sampling rate is non-public and unpredictable to nodes; cheating reduces reputation |
| Replay Attack | Every submission includes a nonce + on-chain randomness to avoid duplicate accounting |

Task Data Packaging Module (Compute Payload Composer)

The task data packaging module is a lightweight builder running locally on DF nodes, dedicated to constructing standardized computing-power reporting packets. This module acts as the bridge that converts off-chain computation results into trustworthy on-chain data formats. It ensures that all transmitted data maintains unified structure, encryption handling, and verifiability, serving as the starting point for secure communication and computing-power verification in the DF architecture.

1) Design Objectives

Encapsulate computation task outcomes into structured, signable, and encryptable data messages;

Provide the basic data unit for computing-power verification, encrypted communication, and on-chain attestation;

Resist risks such as data replay, forgery, tampering, and unclear attribution.

2) Data Packet Structure

The task data packet consists of the following fields:

| Field | Type | Description |
| --- | --- | --- |
| compute_hash | bytes32 | Hash digest of task input + output (e.g., SHA256(task_input + task_output)) |
| timestamp | uint64 | Unix timestamp marking when this task was completed, used for timeliness verification |
| node_id | bytes32 | Node’s DH-ID (i.e., SHA256(pubkey_ECDH)) |
| sig_node | bytes | Node private-key signature over compute_hash + timestamp |
| payload_meta | bytes | Extra metadata (e.g., task type, input scale), used for compute classification and reward distribution |
| nonce | bytes12 | AES-GCM random IV, used to prevent encryption replay |
| cipher_data | bytes | Fully encrypted task payload using shared-secret encryption |
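
As an illustration, the packet fields could be mirrored in a small container that enforces the fixed-width types before a packet is signed or sent. The class name `ComputePacket` and the validation rules are assumptions derived from the field types listed here, not a reference implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputePacket:
    """Hypothetical in-memory mirror of the task data packet."""
    compute_hash: bytes   # bytes32
    timestamp: int        # uint64
    node_id: bytes        # bytes32
    sig_node: bytes       # variable length
    payload_meta: bytes   # variable length
    nonce: bytes          # bytes12
    cipher_data: bytes    # variable length

    def __post_init__(self) -> None:
        # Enforce the fixed-width fields so malformed packets fail early.
        if len(self.compute_hash) != 32:
            raise ValueError("compute_hash must be 32 bytes")
        if len(self.node_id) != 32:
            raise ValueError("node_id must be 32 bytes")
        if len(self.nonce) != 12:
            raise ValueError("nonce must be 12 bytes")
        if not 0 <= self.timestamp < 2**64:
            raise ValueError("timestamp must fit in uint64")
```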

3) Packaging Workflow

Step 1: Compute Task Digest

After completing the computation, the node generates a digest over the input and output:
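
One way to compute such a digest is sketched below. The 8-byte length prefix on each part is an assumption added here so that different (input, output) splits of the same bytes cannot collide; the protocol text only states that the digest covers input and output.

```python
import hashlib


def compute_digest(task_input: bytes, task_output: bytes) -> bytes:
    """Return a 32-byte SHA-256 digest over the task input and output."""
    h = hashlib.sha256()
    for part in (task_input, task_output):
        # Length-prefix each part so (b"ab", b"c") and (b"a", b"bc") differ.
        h.update(len(part).to_bytes(8, "big"))
        h.update(part)
    return h.digest()
```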

Step 2: Construct Additional Metadata

Task type, epoch number, compute-unit size, etc., are placed into metadata:
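
A possible encoding of the metadata is sketched below, assuming JSON with sorted keys so that every node produces identical bytes for identical values. The key names `task_type`, `epoch`, and `compute_units` are illustrative choices based on the items listed above.

```python
import json


def build_metadata(task_type: str, epoch: int, compute_units: int) -> bytes:
    """Serialize task metadata into canonical bytes for payload_meta."""
    meta = {
        "task_type": task_type,
        "epoch": epoch,
        "compute_units": compute_units,
    }
    # Sorted keys and compact separators give a stable byte representation.
    return json.dumps(meta, sort_keys=True, separators=(",", ":")).encode("utf-8")
```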

Step 3: Signature Construction

The node uses its wallet key or DH private key to sign (compute_hash || timestamp):
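
The signed message can be assembled as in the sketch below. It assumes the timestamp is encoded as an 8-byte big-endian integer (matching uint64) and takes the signing primitive as a caller-supplied function `sign`, which in practice would wrap an ECDSA or Ed25519 private-key operation. Both the encoding and the function names are assumptions.

```python
import struct
from typing import Callable


def signing_message(compute_hash: bytes, timestamp: int) -> bytes:
    """Build compute_hash || timestamp as the byte string to be signed."""
    if len(compute_hash) != 32:
        raise ValueError("compute_hash must be 32 bytes")
    # ">Q" packs the timestamp as an unsigned 64-bit big-endian integer.
    return compute_hash + struct.pack(">Q", timestamp)


def sign_packet(sign: Callable[[bytes], bytes],
                compute_hash: bytes, timestamp: int) -> bytes:
    """Produce sig_node by applying the node's signing function."""
    return sign(signing_message(compute_hash, timestamp))
```

Keeping the signing primitive behind a callable lets the same packaging code work with either key type mentioned above.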

Step 4: Encrypted Packaging

Using the shared key K_AB derived from the communication layer, the node encrypts metadata + compute_hash + sig_node with AES-256-GCM:

The final structured data packet is produced and becomes the input to the computing-power verification module.

| Threat | Defense |
| --- | --- |
| Replay Attack | Enforce that timestamp must fall within the block window/round; stale data is rejected |
| Fake Data Injection | All fields are encrypted and signature-verified; signatures must match the private key of the corresponding DH-ID |
| Content Tampering | AES-GCM includes an authentication tag (auth_tag) to ensure message integrity |
| Cross-Node Impersonation | Node identity is bound to the public key; signatures and on-chain registration ensure double verification |

Encrypted Signature & Reporting Module

The encrypted signature and reporting module is responsible for locally signing the task data packet and transmitting the computing-power data through an encrypted channel to designated verification nodes or relays in the network. This module directly inherits the trust foundation of the communication-encryption layer and identity-authentication layer, ensuring the non-repudiation and tamper-resistance of computing-power data, and providing a structured and secure input source for the verification process.

1) Module Objectives

Use the private key corresponding to the on-chain registered identity to apply non-forgeable signatures to computing-power reporting packets;

Ensure that data attribution matches the node identity (DH-ID), and that signatures and wallet addresses can be cross-verified;

Upload messages through a secure encrypted channel to prevent interception or replacement during transmission;

Support asynchronous resending, batch compression, and multi-path broadcasting to ensure high availability of reporting.

2) Signature Mechanism

The module uses elliptic-curve signature algorithms (such as ECDSA / Ed25519) to sign the encapsulated data. The recommended process is:

The signature is bound to the DH-ID, and the wallet address serves as the identity verification source registered on-chain;

The verification node retrieves the DH-ID → wallet → public key mapping from the on-chain registry to complete signature verification;

The signature may be included within the original data structure or attached as a separate field for external verification.
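
The lookup chain on the verification side can be sketched as follows. The two dictionaries stand in for the on-chain registry, and `verify` is a caller-supplied function wrapping the actual ECDSA or Ed25519 check. All names here are hypothetical.

```python
from typing import Callable, Dict, Optional


def resolve_public_key(dh_id: bytes,
                       dh_to_wallet: Dict[bytes, str],
                       wallet_to_pubkey: Dict[str, bytes]) -> Optional[bytes]:
    """Follow the DH-ID -> wallet -> public key mapping; None if unregistered."""
    wallet = dh_to_wallet.get(dh_id)
    if wallet is None:
        return None
    return wallet_to_pubkey.get(wallet)


def verify_report(dh_id: bytes, message: bytes, signature: bytes,
                  dh_to_wallet: Dict[bytes, str],
                  wallet_to_pubkey: Dict[str, bytes],
                  verify: Callable[[bytes, bytes, bytes], bool]) -> bool:
    """Accept a report only if the registered key validates the signature."""
    pubkey = resolve_public_key(dh_id, dh_to_wallet, wallet_to_pubkey)
    if pubkey is None:
        return False  # unknown identity: reject before any signature check
    return verify(pubkey, message, signature)
```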

3) Reporting Paths & Channels

DF provides two reporting modes:

| Mode | Description |
| --- | --- |
| Direct Submit | The node directly sends the signed data packet to the verification server via HTTPS / WebSocket. |
| P2P Broadcast | The node broadcasts the data packet to network peers through libp2p/gossipsub and waits for propagation. |

4) Redundancy, Fault Tolerance, and Asynchronous Retry Mechanism

If verification confirmation is not received within the specified time, an automatic re-submission is triggered;

Each data packet carries a nonce + seq_id to prevent duplicate on-chain submissions;

The number of retries is limited by the smart contract’s “maximum submissions per round” rule to prevent malicious DDoS behavior.
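
The retry cap and the duplicate check can be sketched together. Here `send` is a caller-supplied function that returns True when a confirmation arrives in time, `max_submissions` models the per-round cap, and `DuplicateFilter` models the receiving side. These are illustrative names, and a real client would also wait or back off between attempts.

```python
from typing import Callable, Set, Tuple


def submit_with_retry(send: Callable[[bytes], bool], packet: bytes,
                      max_submissions: int) -> int:
    """Resend until confirmed or the per-round cap is reached.

    Returns the number of attempts used, or -1 if never confirmed.
    """
    for attempt in range(1, max_submissions + 1):
        if send(packet):
            return attempt
    return -1


class DuplicateFilter:
    """Receiver-side check that counts each (node, nonce, seq_id) once."""

    def __init__(self) -> None:
        self._seen: Set[Tuple[bytes, bytes, int]] = set()

    def accept(self, node_id: bytes, nonce: bytes, seq_id: int) -> bool:
        key = (node_id, nonce, seq_id)
        if key in self._seen:
            return False  # a resend of an already-counted packet
        self._seen.add(key)
        return True
```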

Example submission format (JSON message):
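
A hypothetical message assembled from the fields of the task data packet, with byte fields hex-encoded, could be produced as in the sketch below. The hex encoding, the helper name `to_json_message`, and every value shown are placeholders assumed for illustration.

```python
import json


def to_json_message(packet: dict) -> str:
    """Hex-encode byte fields and serialize the packet as a JSON message."""
    encoded = {
        key: ("0x" + value.hex() if isinstance(value, (bytes, bytearray)) else value)
        for key, value in packet.items()
    }
    return json.dumps(encoded, indent=2)


# Placeholder values only; real packets carry actual digests and signatures.
example = {
    "compute_hash": bytes(32),
    "timestamp": 1700000000,
    "node_id": bytes(32),
    "sig_node": bytes(64),
    "payload_meta": b'{"task_type":"inference"}',
    "nonce": bytes(12),
    "cipher_data": b"",
}

print(to_json_message(example))
```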
